Augmented creativity

Our working tools will increasingly incorporate artificial intelligence, which is progressing at an astonishing speed. Here is what you need to know about the most popular tools of the moment, which are sparking a heated debate on how creative jobs will change and on what the balance between humans and machines will be in the future.

By Lorenzo Capitani | On PRINTlovers 96

Artificial intelligence is progressing at an astonishing speed, and its use is spreading like wildfire: so, at least, it would seem from all the talk that has been going on for some time now. The stir is probably due to the release of public betas of tools such as ChatGPT, used by students to do their homework and by news sites to write articles, and of image generators such as Dall-E, which have invaded the Internet and divided the world between enthusiasts and catastrophists who see in this technology the imminent invasion of the machines. 'The problem,' writes Stefano Nasetti in his 'The Dark Side of the Moon', 'is not the technology, but the use made of it. Everything entails risks; the important thing is to be aware of them and assess whether the price we pay (in lost privacy) is adequate to what we receive in return.'

Let's face it: our daily lives are already permeated by AI more than we realise, which shows how effective this technology is and how much it supports our activities. But what is artificial intelligence? 'It is an automated system capable of making decisions and performing tasks without human intervention. AI systems use algorithms and data to make decisions and can learn from their experiences. This is done through machine learning, which involves using large data sets to train systems to recognise patterns, understand context and make decisions.' This is the definition given to us by the ChatGPT bot, and it sums up the complexity of a technology that differs from every other human invention precisely because of the autonomy and self-learning implied by the word 'intelligence'. ChatGPT, developed by OpenAI (a company Elon Musk co-founded in 2015 and later left), is according to experts one of the most powerful AI engines currently available, much cited and used precisely because it can, among other things, produce texts that are scientifically precise, formally correct, semantically sensible and, one might even say, surprisingly human. It is no coincidence that, thanks to this ability, ChatGPT reached an estimated 100 million users in about two months: TikTok, which held the record, took around nine.

Beyond possible scenarios

For the inventors of artificial intelligence, the aim was initially to go beyond computer science and computational science, which rest on the raw calculating capacity of computers (where it is enough to increase power), and to build entities capable of reasoning, i.e. of solving complex problems from knowledge of data and of the laws governing certain phenomena, such as proving a theorem from the principles of mathematics. Until then, every machine (even those imagined by Asimov) relied on instructions given at the time of programming, so complex as to give the illusion of intelligence. When IBM's Deep Blue beat Russian world chess champion Garry Kasparov in 1997, it 'simply' had the computing power to systematically evaluate 200 million positions per second and to search many moves ahead in real time, choosing the strongest. Intelligence comes into play when instructions are no longer given for every possible fork in the decision-making road, and it is the machine that can literally create something that was not there before and represent it in natural language, or at any rate in a form comprehensible to humans. To do this, it relies on programming but above all on experience, i.e. on memorising and learning from data, on extracting information, assembling it and manipulating it, to the point of deducing and reasoning on the basis of probability, statistics and the economy of the solution. Finally, to understand and express itself, a machine needs linguistic skills based on distributional semantics, a branch of computational linguistics that uses large amounts of linguistic data to work out the meaning of words and sentences from the contexts in which they appear.
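Distributional semantics rests on the idea that words used in similar contexts have similar meanings. A minimal sketch, assuming a six-sentence invented corpus and plain co-occurrence counts (real systems use billions of words and learned embeddings): each word becomes a vector of the words found around it, and cosine similarity compares those vectors.

```python
from collections import Counter, defaultdict
from math import sqrt

# Toy corpus: invented sentences, far too small for real use.
CORPUS = [
    "the cat drinks milk",
    "the dog drinks water",
    "the cat chases the mouse",
    "the dog chases the cat",
    "we print the poster on paper",
    "we print the magazine on paper",
]

def cooccurrence_vectors(sentences, window=2):
    """Map each word to counts of the words seen within `window` positions of it."""
    vectors = defaultdict(Counter)
    for sentence in sentences:
        words = sentence.split()
        for i, word in enumerate(words):
            lo, hi = max(0, i - window), min(len(words), i + window + 1)
            for j in range(lo, hi):
                if j != i:
                    vectors[word][words[j]] += 1
    return vectors

def cosine(a, b):
    """Cosine similarity between two sparse count vectors."""
    dot = sum(a[k] * b[k] for k in a if k in b)
    norm = sqrt(sum(v * v for v in a.values())) * sqrt(sum(v * v for v in b.values()))
    return dot / norm if norm else 0.0

vecs = cooccurrence_vectors(CORPUS)
print(round(cosine(vecs["cat"], vecs["dog"]), 2))    # → 0.92
print(round(cosine(vecs["cat"], vecs["paper"]), 2))  # → 0.0
```

'cat' and 'dog' share contexts ('the', 'drinks', 'chases') and come out as similar; 'cat' and 'paper' share none, so the program "knows" they are unrelated without ever being told what either word means.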

All around us

As we said, AI is already among us more than it seems: it is in almost every field where there is some computer processing at a more or less sophisticated level. From satellite navigators to search engines, from personalised social media feeds to advertisements, from translators to bots, from suggestions on e-commerce sites to Photoshop's Neural Filters, speech recognition, predictive maintenance, facial recognition... and the list could go on. In all these cases, there is no longer just simple 'if... else' logic: just as we humans do not merely choose one fork in the road at a time, but deduce, abstract, remember, learn, reason, create and act, so do all these AIs. As the philosopher Cosimo Accoto writes in his book Il mondo dato ("The Given World"), this is 'an epochal, not episodic, passage of civilisation'. If I ask Siri or Alexa to play a song, the AI will also take into account what I asked for in the past (if anything), what I listened to and for how long, what advertisers are sponsoring, what others are listening to, my location, and who knows how many other variables we are not even allowed to know: not only are the algorithms strictly secret but, above all, they are in perpetual evolution, somewhat like a human brain, and by now no longer fully controllable. From its release onwards, an AI can process, learn and evolve through its iterations and experiences. We often tend to attribute human characteristics to these algorithms, but in reality there is no understanding or intelligence as we understand it: it is purely statistical calculation, and results are generated probabilistically. As Gary Marcus, Professor Emeritus of Psychology and Neural Science at New York University and founder of the machine learning company Geometric Intelligence, wrote, 'GPT-3 doesn't learn anything about the world, but it does learn a lot about how people use words'.
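Marcus's point can be made concrete with a deliberately tiny language model. The sketch below is a bigram model, nothing like GPT-3's architecture: it learns only which word tends to follow which in a handful of invented requests, then generates text by sampling from those frequencies. It holds statistics about word usage and no knowledge of what a song or a radio is.

```python
import random
from collections import Counter, defaultdict

def train_bigrams(sentences):
    """Count, for each word, which words follow it in the training text."""
    follows = defaultdict(Counter)
    for sentence in sentences:
        words = ["<s>"] + sentence.lower().split() + ["</s>"]
        for prev, nxt in zip(words, words[1:]):
            follows[prev][nxt] += 1
    return follows

def next_word_probabilities(follows, word):
    """Turn raw counts into a probability distribution over the next word."""
    counts = follows[word]
    total = sum(counts.values())
    return {w: c / total for w, c in counts.items()}

def generate(follows, rng, max_words=10):
    """Sample words one at a time, each drawn according to the learned probabilities."""
    word, output = "<s>", []
    for _ in range(max_words):
        probs = next_word_probabilities(follows, word)
        word = rng.choices(list(probs), weights=list(probs.values()))[0]
        if word == "</s>":
            break
        output.append(word)
    return " ".join(output)

# Invented training requests, for illustration only.
texts = ["play a song by the band", "play a song I like", "play the radio"]
model = train_bigrams(texts)
print(next_word_probabilities(model, "play"))  # a: 2/3, the: 1/3
print(generate(model, random.Random(0)))       # a random, plausible-looking request
```

Every output is a recombination of observed word pairs, weighted by how often people used them: probabilistic generation in miniature.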

Generative algorithms

The release of free public betas of a whole range of AI tools, and all the hype inviting people to try them out and see their extraordinary creative abilities, is no coincidence: as you use a voice assistant, it learns from you. But how? It is a very complex subject, but one of the aspects involved is certainly machine learning, the ability to train a model: the more examples (data) we provide the machine with, the more accurate the model becomes, and the more accurate the model, the more reliable its predictions. Consider the capabilities of the AI behind Siri, which can recognise the English title of a song spoken by a non-English speaker who mispronounces it: yet it understands. The big challenge for AI now is to understand accurately what it is being asked in natural language, which often carries a meaning different from the one that can be inferred from morphosyntactic structure alone: sarcasm and irony are examples. Not long ago, it was said that machines were no good with irony: try telling Siri a joke now, making a pun or insulting her covertly.
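A toy sketch of the "more examples, more accurate model" idea, under invented assumptions: the hidden rule (label 1 when x > 0.6), the uniformly random data and the midpoint estimator are all hypothetical, chosen only because the effect is easy to see. Real speech recognisers are trained on vastly richer data with very different models.

```python
import random

TRUE_THRESHOLD = 0.6  # hidden rule the learner must discover (hypothetical)

def label(x):
    """The 'teacher': 1 if x is above the hidden threshold, else 0."""
    return 1 if x > TRUE_THRESHOLD else 0

def fit_threshold(examples):
    """Estimate the threshold from labelled (x, y) pairs with x in [0, 1).

    Returns the estimate and the width of the interval in which the true
    threshold must lie, given the examples seen so far.
    """
    highest_negative = max((x for x, y in examples if y == 0), default=0.0)
    lowest_positive = min((x for x, y in examples if y == 1), default=1.0)
    estimate = (highest_negative + lowest_positive) / 2
    return estimate, lowest_positive - highest_negative

rng = random.Random(42)
stream = [(x, label(x)) for x in (rng.random() for _ in range(1000))]

for n in (5, 50, 500, 1000):
    estimate, uncertainty = fit_threshold(stream[:n])
    print(f"{n:>4} examples: estimate {estimate:.3f}, uncertainty {uncertainty:.3f}")
```

Because each run sees a longer prefix of the same stream, the interval in which the true threshold can lie never widens, and the estimate can never be further from the truth than half that interval.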

Gamification with a purpose

We taught them mainly by interacting with them, perhaps having fun swearing at them or making fun of them, just as we train Amazon's, Apple's or Google's AI when we upload our photos to the space they provide for free. Another example of AI at our fingertips is the iPhone software that recognises the faces of people saved in the Photos library: it keeps recognising them over time as they grow or age, but it also recognises flowers, trees, plants, animals and places (an icon on the photo signals a successful recognition), tells us the name, type, species or breed, gives information and searches the Internet for similar images. We have fun with the machine, and it learns from us as we correct its mistakes: when, for example, it does not understand us and we repeat ourselves, when we say that a piece of content does not interest us, when we like it or not, when we skip a post or linger on it, when we follow a suggested path or ignore it.
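The feedback loop described here can be pictured as a running score per topic that every interaction nudges up or down. This is a hypothetical sketch: the signal names, their weights and the learning rate are invented for illustration, since none of the platforms mentioned publishes how it weighs skips, likes or lingering.

```python
from collections import defaultdict

# Hypothetical weights for explicit and implicit signals; purely illustrative.
SIGNAL_WEIGHTS = {
    "like": 1.0,
    "linger": 0.5,             # stayed on the post longer than usual
    "follow_suggestion": 0.7,
    "skip": -0.5,
    "dislike": -1.0,
    "ignore_suggestion": -0.3,
}

class PreferenceModel:
    """Keeps a score per topic and nudges it after every interaction."""

    def __init__(self, learning_rate=0.2):
        self.learning_rate = learning_rate
        self.scores = defaultdict(float)

    def record(self, topic, signal):
        # Move the topic's score a small step in the direction of the signal.
        self.scores[topic] += self.learning_rate * SIGNAL_WEIGHTS[signal]

    def rank(self, topics):
        # Topics never seen before get a neutral score of 0.
        return sorted(topics, key=lambda t: self.scores.get(t, 0.0), reverse=True)

model = PreferenceModel()
for topic, signal in [("typography", "like"), ("typography", "linger"),
                      ("football", "skip"), ("printing", "follow_suggestion"),
                      ("football", "dislike")]:
    model.record(topic, signal)

print(model.rank(["football", "printing", "typography", "cooking"]))
# ['typography', 'printing', 'cooking', 'football']
```

No single click decides anything; it is the accumulation of small corrections that shapes what the system shows next.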

Photoshop's Smart Portrait filter lets you modify a face through a series of parameters linked to expression, age, the position of the head and pupils and the direction of the light. This implies that the software can recognise each of these features, and that it learnt to do so from the images that millions of users have processed with the Neural Filters, which are captured, processed and shared with Adobe's servers. It is no coincidence that these filters only work with an Internet connection and once specific consent has been given in the Privacy section of the program's Preferences. This is also why there are online versions of almost all image, video and audio processing and format conversion programs.

According to Fabrizio Falchi, a researcher at the Institute of Information Science and Technologies of the CNR in Pisa: 'With rules and classical programming, we cannot teach a machine to recognise a cat. The best way is to define an architecture that can learn, and to expose the machine to many pictures of animals, telling it which are cats and which are not. The machine autonomously develops the skills needed to recognise a cat. It is like children learning to recognise a cat: nobody explains exactly how to proceed. They see examples and, over time, learn to distinguish a cat from a dog.'
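Falchi's point, learning from labelled examples instead of writing rules, can be shown with one of the simplest learning methods, k-nearest neighbours. This is a swapped-in technique, far simpler than the neural networks used for real image recognition, and the two numeric features are hypothetical stand-ins for what a network would extract from pixels. Nowhere does the code say what a cat is.

```python
from collections import Counter
from math import dist

# Hypothetical, hand-made features: (ear pointiness, snout length), both 0-1.
# A real system would learn its own features from raw pixels.
EXAMPLES = [
    ((0.90, 0.20), "cat"), ((0.85, 0.25), "cat"), ((0.80, 0.15), "cat"),
    ((0.30, 0.70), "dog"), ((0.40, 0.80), "dog"), ((0.20, 0.60), "dog"),
    ((0.10, 0.90), "dog"),
]

def classify(features, examples, k=3):
    """Label a new animal by majority vote among its k most similar examples."""
    nearest = sorted(examples, key=lambda ex: dist(features, ex[0]))[:k]
    votes = Counter(lbl for _, lbl in nearest)
    return votes.most_common(1)[0][0]

print(classify((0.88, 0.22), EXAMPLES))  # cat
print(classify((0.25, 0.75), EXAMPLES))  # dog
```

Adding more labelled examples changes the answers without touching a single line of logic, which is exactly the shift from programming rules to learning from data.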

Art is in our eyes, not in the algorithms

This is not necessarily bad because, in this way, AI has reached such levels that it can create products, services and needs, and build business models around them. Adobe itself, working with its Smooth Skin filter, has developed an AI that can tell whether an image has been edited, or whether parts of it have been cloned, altered or deleted, by looking for anomalies in the primary colours, analysing noise and brightness variations, and recognising the authentic artefacts that cameras leave. In January, DVLOP and SLR Lounge, specialists in Lightroom presets, released Impossible Things, an AI-based photo editor that works natively within Lightroom and can automatically enhance photos while keeping them natural. According to the developers, the AI, trained on over one million files, 200 different camera models and more than 300 lenses, can recognise and correctly edit every common scenario a photographer faces, including mixed lighting, low light and high dynamic range.
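Adobe has not detailed its method here, but one general forensic idea the paragraph mentions, analysing noise variations, can be sketched in a few lines. The assumption (a simplification) is that a camera leaves a fairly uniform grain across a photo, so a retouched, painted or pasted area tends to have noticeably less or more noise than the rest. The sketch splits a greyscale image into blocks, estimates each block's noise from differences between neighbouring pixels and flags outliers; the block size and tolerance are arbitrary choices.

```python
import random
from statistics import median, pstdev

def block_noise_levels(image, block=8):
    """Estimate noise in each block from differences between horizontal neighbours.

    Assumes the image dimensions are multiples of `block`.
    """
    levels = {}
    for top in range(0, len(image), block):
        for left in range(0, len(image[0]), block):
            diffs = [
                image[r][c + 1] - image[r][c]
                for r in range(top, top + block)
                for c in range(left, left + block - 1)
            ]
            levels[(top, left)] = pstdev(diffs)
    return levels

def suspicious_blocks(image, block=8, tolerance=2.0):
    """Flag blocks whose noise is far below or above the image's typical level."""
    levels = block_noise_levels(image, block)
    typical = median(levels.values())
    return sorted(pos for pos, lvl in levels.items()
                  if lvl < typical / tolerance or lvl > typical * tolerance)

# Synthetic 32x32 "photo": mid-grey with sensor-like random noise.
rng = random.Random(7)
size = 32
image = [[128 + rng.gauss(0, 8) for _ in range(size)] for _ in range(size)]
# Simulate a retouched patch: a perfectly smooth 8x8 area with no noise at all.
for r in range(8, 16):
    for c in range(16, 24):
        image[r][c] = 128.0

print(suspicious_blocks(image))  # should include the block at (8, 16)
```

The smooth patch stands out because its noise estimate is zero while every other block sits near the same level. Real detectors combine many such cues and are far more robust than this.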

After opening its vast image library to Dall-E, Shutterstock launched Shutterstock.AI, an image generator based on that technology and on LG's EXAONE. The tool lets users start from a series of templates for the most common outputs, from classic photos to social posts, and create images by giving the AI a real brief; in addition, its predictive engine suggests creative assets likely to be successful. In this way, users can get immediate information on patterns, images and colours to build campaigns and spot trends as they develop. But where do all the images used by AI come from? Stable Diffusion, for example, the model released by Stability AI as a competitor to Dall-E and also integrated into Midjourney, was trained on three massive datasets of 2.3 billion images, updated to 2020 and collected by LAION, a non-profit organisation dedicated to the dissemination of machine learning, which in turn obtained them from Common Crawl, a non-profit organisation that crawls billions of web pages every month and releases them as massive datasets. An analysis of a sample of 12 million images revealed that 47% came from just 100 domains, mainly Pinterest, and that blogs hosted on wp.com and wordpress.com are a vast data source, as are SmugMug, Blogspot, Flickr, DeviantArt, Wikimedia and Tumblr. These datasets, however, apply no checks to the content collected, only moderation of the generated files: adult images posted on Tumblr, for instance, which on the social network can only be viewed by users over 18, typically end up in the training datasets, as do images of war, violence or offence, and contribute to the AI's learning. This came to light thanks to haveibeentrained.com, a website that built an engine to search the image dataset so that authors can find their works used without authorisation. Given AI's capacity to learn, who decides what to do with this sensitive content? Who filters it? A suitably trained AI? Who decides whether a piece of content is offensive or violent? And offensive or violent for whom?
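The arithmetic behind a figure like "47% from just 100 domains" is simple to reproduce on any list of image URLs. A minimal sketch, with invented example URLs and a naive rule that keeps only the last two labels of each host name (so subdomains such as i.pinimg.com collapse into pinimg.com); that rule is an assumption and would mishandle suffixes like .co.uk.

```python
from collections import Counter
from urllib.parse import urlparse

def base_domain(url):
    """Naively reduce a host name to its last two labels (i.pinimg.com -> pinimg.com)."""
    host = urlparse(url).hostname or ""
    return ".".join(host.split(".")[-2:])

def top_domain_share(urls, top_n=100):
    """Fraction of URLs whose domain is among the `top_n` most frequent ones."""
    counts = Counter(base_domain(u) for u in urls)
    covered = sum(count for _, count in counts.most_common(top_n))
    return covered / len(urls)

# Invented sample URLs, for illustration only.
sample = [
    "https://i.pinimg.com/originals/a1.jpg",
    "https://i.pinimg.com/736x/b2.jpg",
    "https://i0.wp.com/example-blog.net/c3.jpg",
    "https://myblog.wordpress.com/d4.png",
    "https://farm1.staticflickr.com/e5.jpg",
    "https://upload.wikimedia.org/f6.png",
    "https://example.org/g7.jpg",
    "https://i.pinimg.com/564x/h8.jpg",
]
print(f"{top_domain_share(sample, top_n=2):.0%} of the sample comes from the top 2 domains")
# 50% of the sample comes from the top 2 domains
```

On a real crawl, the same count over millions of URLs is what reveals how heavily a dataset leans on a few platforms.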

Prompt: a dummy with a pair of jeans and a red t-shirt

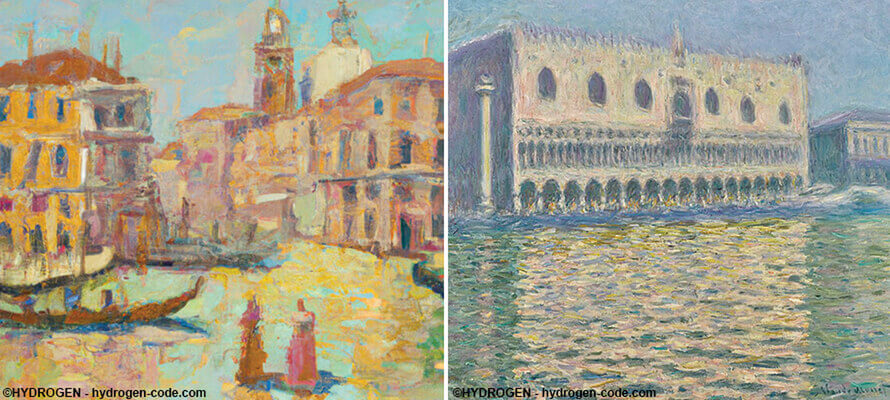

Eric Groza, creative director at the advertising agency TBWA, used the image generation platform Midjourney to imagine a collaboration between IKEA and Patagonia, with surprising results. Something similar was done by Alessio Garbin, Data & Digital Marketing Coordinator at Barilla, who created a series of illustrations for a hypothetical campaign for Mulino Bianco pancakes set against colourful backgrounds. Both examples are published on LinkedIn. And in 2022, Jason M. Allen won an award in a competition for emerging artists with "Théâtre D'opéra Spatial", created with Midjourney, one of the most exciting and cutting-edge AI tools for generating images from textual descriptions, but not the only one: Google has Imagen, and OpenAI has Dall-E. If we broaden the field further, many algorithm-based image generation and manipulation systems are becoming increasingly 'intelligent'. Using them is simple: you enter a textual description, and the AI processes it, generating a possible image that reflects the input. In Il Poligrafico 212, Luca Magnoni and Pietro Vito Spina asked whether AI can catch up with human creativity by putting Midjourney itself to the test on various topics. The results, ranging from logos to impressionist and protest-style images, are incredible and, at the same time, disturbing in the Freudian sense of the uncanny: all these creations generate a sort of irrational disquiet because of their adherence to reality and the way they were generated.
At the same time, sceptical and suspicious as we humans are in the face of novelty, we are inclined to look for the glitch in the Matrix, as the film puts it, that Montalian 'hole in the net' that is undeniably there: it may be that the training images, for technical expediency, are usually downsampled to 512 × 512 px, or that the output itself often looks sketchy, with parts out of place, missing or illogical; yet we obtain results that are entirely credible. One example is the experiment by French photographers Alexis Gerard and Annabelle Matter, who challenged Dall-E 2 for Le Figaro Magazine (issue of 11/11/2022) to create images inspired by passages from Marcel Proust while they photographed the same places: the images are entirely comparable and sometimes superimposable. Another is the experiment by the YouTube channel Codex Community, which in the video "How to use AI Art and ChatGPT to Create an Insane Web Design" (https://youtu.be/8I3NTE4cn5s) tried to create a website, graphics included, without writing a single line of code.
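Reducing a photo to a fixed 512 × 512 training size discards a great deal of information. A minimal sketch of one common preprocessing recipe, assumed here for illustration rather than taken from any specific training pipeline: scale the image so its short side is 512 px, then crop the centre square.

```python
def resize_then_center_crop(width, height, target=512):
    """Return the resized size and the centre crop box (left, top, right, bottom).

    The short side is scaled to `target`; the long side is then cropped
    symmetrically to a `target` x `target` square.
    """
    scale = target / min(width, height)
    new_w, new_h = round(width * scale), round(height * scale)
    left = (new_w - target) // 2
    top = (new_h - target) // 2
    return (new_w, new_h), (left, top, left + target, top + target)

size, box = resize_then_center_crop(6000, 4000)
print(size, box)  # (768, 512) (128, 0, 640, 512)
print(f"{512 * 512 / (6000 * 4000):.1%} of the original pixel count")  # 1.1%
```

For a 6000 × 4000 px photo, a third of the resized width is cropped away and what remains holds about 1% of the original pixel count, which gives a sense of how much detail the model never sees.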

Humans 'augmented' by algorithms

All that remains, then, is to use these AIs without the Luddite attitude of those who fear the new and worry that, in the near future, humans will no longer be needed for creative work, aware instead that, beyond cognitive R&D, they are useful as genuine support for brainstorming, or 'to start a job, prepare a shell to be filled, and take care of mechanical and boring tasks', as Lucio Bragagnolo, a journalist and expert on the Apple world, writes in his QuickLoox blog. You don't type a brief into a text field and get the campaign out, nor do you describe the picture you want and get it, at least not yet. It is like looking into a kaleidoscope: every time you turn the tube, the suggestion changes, but we are far from a finished product. It is more like having someone on hand to generate endless drafts of the idea you have in your head. All the evidence from those who have run serious experiments says that an expert is still needed, along with post-production and checking: in short, human supervision. Results are a different matter when the AI is given technical-scientific problems or questions: OpenAI's Codex, for example, really does convert instructions given in natural language into code, Python in particular, and Quizard AI solves physics or mathematics problems step by step.

Increasingly, our work tools will have artificial intelligence embedded in them. DiffusionBee, for example, installs Stable Diffusion on a Mac and, besides text-to-image generation, offers commands to work on the generated (or uploaded) image: modifying it, filling in missing parts, adding or removing elements and even increasing its resolution. If you do not want to start from your own prompt (the text command with which you communicate with the AI), there are libraries of images already generated by bots or other users, such as ArtHub.ai, which offers a boundless collection of classified images with their metadata to use as a starting point. WoodWing, too, has introduced AI tools into its DAM, such as facial and object recognition and Google-style reverse image search, and uses them for heuristics and for creating smart collections, but also to automate renditions and asset editing operations such as cropping, contouring and isolating a subject from the background. WoodWing's AI or Google's Vision AI also allows for auto-tagging, saving us the hours of metadata entry needed to make our products searchable: it does so with us, according to our instructions and intentions; it does so by following the trends of the moment, translating and taking into account synonyms, slang and misspellings; and it does so by suggesting taxonomies we might never have imagined. In short, AI is just a click away: we need to understand it, exploit it and work with it as a team. A DeepMind algorithm recently improved on a 50-year-old record for multiplying matrices, and then two Austrian researchers found an even more efficient solution. They didn't beat the algorithm; they collaborated with it.
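The 50-year-old problem mentioned here concerns how many multiplications are needed to multiply matrices. Schoolbook multiplication of two 2 × 2 matrices takes 8 multiplications; in 1969 Volker Strassen showed that 7 are enough, and applied recursively to large matrices that saving adds up. DeepMind's system (AlphaTensor) searched for schemes of this kind with even fewer multiplications for larger sizes. The sketch shows Strassen's classic 2 × 2 scheme next to the schoolbook one, not the newer schemes themselves.

```python
def naive_2x2(A, B):
    """Schoolbook 2x2 matrix product: 8 multiplications."""
    return [
        [A[0][0] * B[0][0] + A[0][1] * B[1][0], A[0][0] * B[0][1] + A[0][1] * B[1][1]],
        [A[1][0] * B[0][0] + A[1][1] * B[1][0], A[1][0] * B[0][1] + A[1][1] * B[1][1]],
    ]

def strassen_2x2(A, B):
    """Strassen's 1969 scheme: the same product with only 7 multiplications."""
    (a, b), (c, d) = A
    (e, f), (g, h) = B
    m1 = (a + d) * (e + h)
    m2 = (c + d) * e
    m3 = a * (f - h)
    m4 = d * (g - e)
    m5 = (a + b) * h
    m6 = (c - a) * (e + f)
    m7 = (b - d) * (g + h)
    # More additions and subtractions, but one multiplication fewer.
    return [
        [m1 + m4 - m5 + m7, m3 + m5],
        [m2 + m4, m1 - m2 + m3 + m6],
    ]

print(strassen_2x2([[1, 2], [3, 4]], [[5, 6], [7, 8]]))  # [[19, 22], [43, 50]]
```

Trading one multiplication for extra additions looks trivial at this size, but because the entries can themselves be matrix blocks, the saving compounds at every level of recursion.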